Summary tab

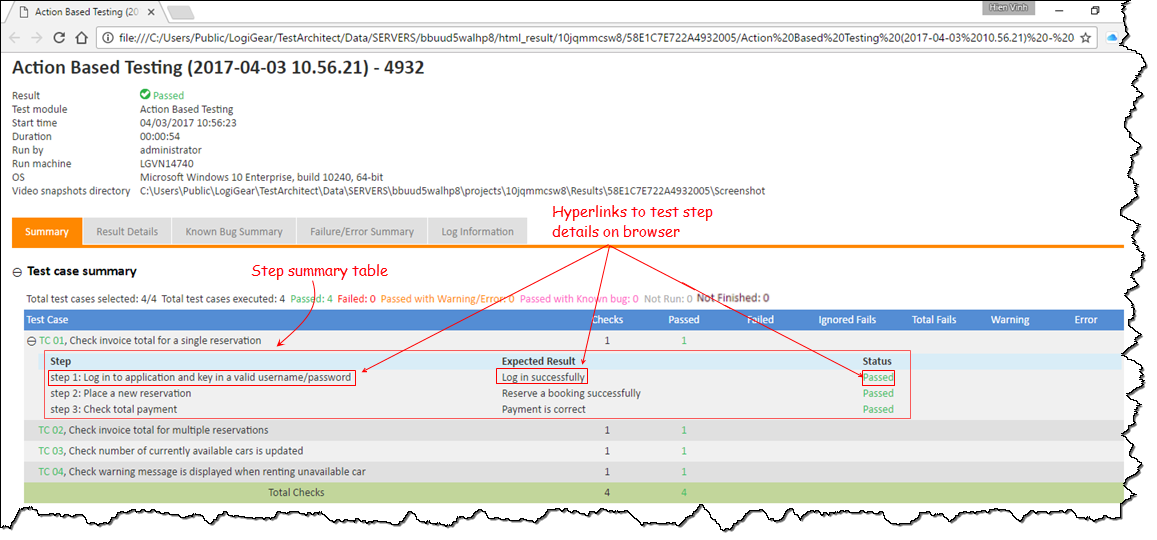

The Summary tab of a test result reports basic information about the test run. Each summary report represents the results of a single run of one test module.

The summaries on the tab are grouped into the sections described below.

General Information section

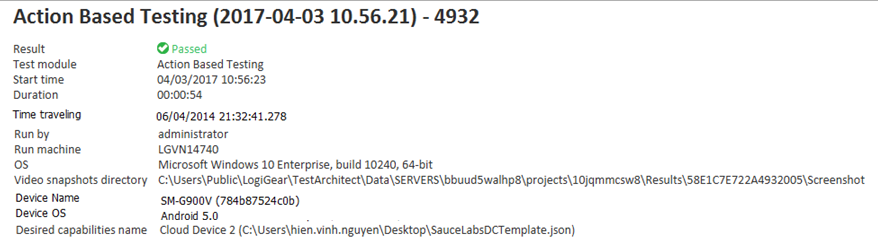

The top of the Summary tab lists the following items:

Table 1. General information

| Field | Description |
|---|---|
| Result | Result of the test execution |
| Test module | Name of the executed test module |
| Revision timestamp | Timestamp of the executed revision of the test module |
| Start time | Start time of the test execution |
| Duration | Duration of the test execution |
| Time traveling | Timestamp selected for the run, shown when time traveling is applied to the run and the executed test module is checked in (learn more) |
| Run by | User who ran the test |
| Run machine | TestArchitect controller that executed the test |
| OS | Operating system of the TestArchitect controller that executed the test |
| Video snapshots directory | Location where the screen-recording videos taken during the automated test run are stored |
| Device Name | Name of the target physical mobile device |
| Device OS | Operating system of the target physical mobile device |
| Desired capabilities name | Name of the target cloud mobile device and the full path to the JSON file that defines the target cloud devices (learn more) |

The test result name consists of:

- test module name;

- if part of a serial test run, the test module’s chronological position in the test run, in parentheses;

- execution timestamp, in the format: (yyyy-mm-dd hh.nn.ss), where nn=minutes;

- a hyphen (-);

- process ID of the test module’s run, as assigned by the operating system. (This ensures a unique result name in the event that two executions of the same test module have identical timestamps.)
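The components listed above can be assembled as in the following minimal Python sketch. It is an illustration only, not TestArchitect code: the function name `build_result_name`, its parameters, and the exact spacing between components are assumptions, since the list specifies the order and the timestamp format but not the separators.

```python
from datetime import datetime
from typing import Optional


def build_result_name(module: str, started: datetime, pid: int,
                      position: Optional[int] = None) -> str:
    """Assemble a result name from the components listed above (hypothetical helper)."""
    parts = [module]
    if position is not None:
        # Present only when the module ran as part of a serial test run.
        parts.append(f"({position})")
    # yyyy-mm-dd hh.nn.ss, where nn = minutes; periods separate the time fields.
    parts.append(started.strftime("(%Y-%m-%d %H.%M.%S)"))
    # Assumption: single spaces between components, no spaces around the hyphen.
    return " ".join(parts) + f"-{pid}"


name = build_result_name("Login", datetime(2024, 3, 5, 14, 7, 9), pid=4312, position=2)
print(name)  # Login (2) (2024-03-05 14.07.09)-4312
```

Because the process ID is appended last, two runs of the same module that start within the same second still produce distinct names.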

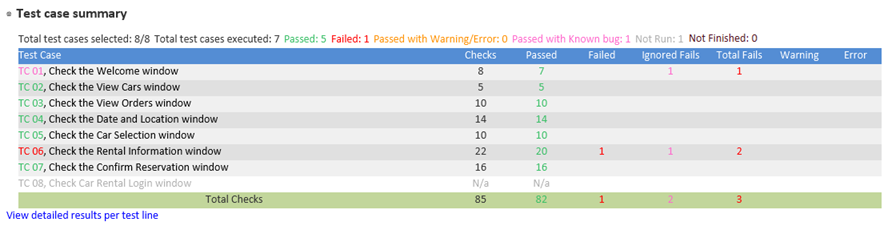

Test Case Summary section

This section lists the executed test cases and any skipped test cases, along with their results: passed, failed, passed with warning/error, passed with known bug, not run, or not finished.

Table 2. Test case summary

| Field | Description |
|---|---|
| Total test cases selected | The number of test cases selected to be executed. |
| Total test cases executed | The number of test cases that were actually executed. |
| Passed | The number of passed test cases. |
| Failed | The number of failed test cases. |
| Passed with Warning/Error | The number of test cases that passed, but in which at least one warning or error was detected. |
| Passed with known bug | The number of test cases that failed, but for which each failed check was already marked as a known bug. |
| Not Run | The number of test cases that were skipped during execution because the automated test was halted. Note that when a test case is skipped, its detailed information shows the text N/a. |
| Not Finished | The number of test cases that were unable to reach the end of an automated run. |
| Test Case | A list of all executed test cases. Each test case name in the leftmost column of this list is hyperlinked; clicking it displays the result details in a web browser. |
| Checks | The number of checkpoints (check actions) in each test case. |
| Passed | The number of checkpoints with passed results in each test case. |
| Failed | The number of checkpoints with ordinary failed results in each test case. |
| Ignored Fails | The number of failed checkpoints that are marked as known bugs in each test case. |
| Total Fails | The number of ordinary failed checkpoints plus the number of ignored failed checkpoints in each test case. |
| Warning | The number of checkpoints producing automation warnings in each test case. |
| Error | The number of checkpoints resulting in automation errors in each test case. |
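The relationship between the per-test-case check counts and the test case result can be sketched in Python as follows. This is a hedged illustration, not TestArchitect's implementation: the `CheckCounts` class and `classify` function are hypothetical, the order in which the rules are applied is an assumption, and the Not Run and Not Finished states are left out because they depend on how the run ended rather than on check counts.

```python
from dataclasses import dataclass


@dataclass
class CheckCounts:
    """Per-test-case checkpoint counts (hypothetical container)."""
    passed: int = 0
    failed: int = 0          # ordinary failed checks
    ignored_fails: int = 0   # failed checks marked as known bugs
    warnings: int = 0
    errors: int = 0

    @property
    def total_fails(self) -> int:
        # Total Fails = Failed + Ignored Fails, as defined in Table 2.
        return self.failed + self.ignored_fails


def classify(c: CheckCounts) -> str:
    """Map check counts to a result label; rule precedence is an assumption."""
    if c.failed > 0:
        return "Failed"
    if c.ignored_fails > 0:
        # Every failed check was already marked as a known bug.
        return "Passed with known bug"
    if c.warnings > 0 or c.errors > 0:
        return "Passed with Warning/Error"
    return "Passed"


counts = CheckCounts(passed=5, failed=0, ignored_fails=2)
print(counts.total_fails, classify(counts))  # 2 Passed with known bug
```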

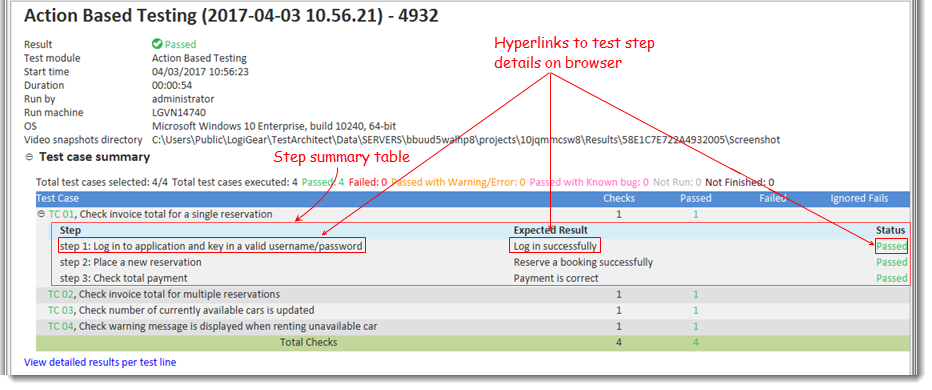

Step Summary table: This table shows at a glance which test steps have automation problems. Because it identifies the exact test steps in which failures, errors, or warnings occur, it also helps you follow the test flow.

Figure: Step Summary table on TestArchitect Client

Figure: Step Summary table on a web browser

- This table is only available when you create test steps.

- Click the icon next to the test case’s title to expand and view the Step Summary table for each test case.

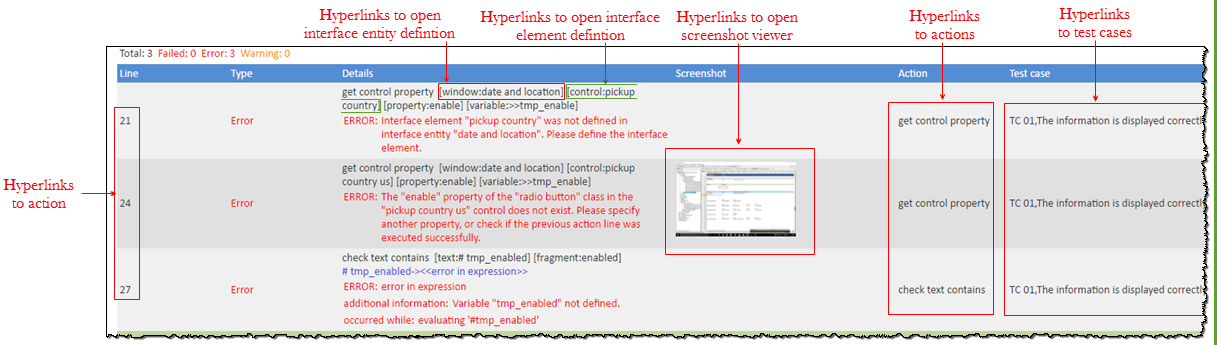

Failure/Error Summary section

This section lists the failures and errors that occurred during the test run. For more details, see the Failure/Error Summary tab.

Known Bug Summary section

This section displays details about the known bugs marked on action lines. For more details, see the Known Bug Summary tab.

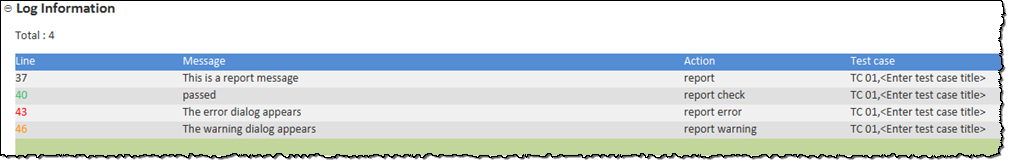

Log Information section

This section displays the logging information produced by the report actions in the test procedure, that is, report, report check, report warning, and report error.

For more details, see the Log Information tab.

Additional functions

The Summary tab also has an icon bar that gives quick access to functions such as deleting and exporting test results and setting and comparing baselines. The icon bar is located in the upper-right corner of the Summary tab.

The available options vary with the type of test result: local or repository.

Available options for a local result:

- Locate the test result in the result tree node.

- Submit bug to an external bug tracking system, such as JIRA.

- Attach the test results to a JIRA bug.

- Delete the test result.

- Add the local test result to the repository.

- Resolve unverified picture checks.

- Export test result to an HTML file.

- Compare to baseline.

Available options for a repository result:

- Locate the test result in the result tree node.

- Submit bug to an external bug tracking system, such as JIRA.

- Attach the test results to a JIRA bug.

- Delete the test result.

- Export the test result.

- Resolve unverified picture checks.

- Export test result to an HTML file.

- Set as baseline.

- Compare to baseline.